D.A.T.A.: Free Data Collection App for iPhone

The Direct Assessment Tracking Application (D.A.T.A.) is designed to allow users to precisely measure how often and how long events occur over time. The application can be used to conveniently and inconspicuously collect data on a wide range of events in a variety of settings.

"A Day of Great Illumination: B. F. Skinner's Discovery of Shaping," by Gail Peterson.

Why wasn't behavior shaping discovered earlier in history? Animal training, using methods specifically tailored to the animal and the target behavior, had been practiced for thousands of years. Yet it was not until the middle of the 20th century that generic teaching techniques were discovered. The discovery of shaping in 1943 led Skinner to a better understanding of the processes of verbal behavior, emphasizing function over topography.

B.F. Skinner demonstrates shaping.

What is Delay Discounting?

Delay discounting is the decrease in the current value of a reward as the delay to receiving it increases. Basically, because we devalue delayed rewards, a dollar now may be worth as much to us as $5 later. In a classic 1972 study, "Commitment, Choice and Self-Control," Rachlin and Green found that pigeons preferred 2 seconds of immediate access to food over 4 seconds of access delayed by only 4 seconds.

The formula for calculating delay discounting is:

Discounted value = A/(1 + k•D)

"A" is the immediate value of the reward. "k" is a constant sometimes called "impulsivity." "D" is the delay. Higher k values mean the organism is more likely to take a small immediate reward over a larger delayed reward.

Amy Odum reviews the delay discounting/impulse control literature in her 2011 article, "Delay Discounting: I'm a k, You're a k."
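As a rough illustration, the discounting formula above can be computed in a few lines of Python. The function name and the k value of 0.5 per second are assumptions made only for this sketch. They are not values reported by Rachlin and Green. The two options mirror the pigeon example: 2 seconds of food now versus 4 seconds of food after a 4-second delay.

```python
def discounted_value(amount, k, delay):
    """Return A / (1 + k * D), the discounted value of a delayed reward."""
    if k < 0 or delay < 0:
        raise ValueError("k and delay must be non-negative")
    return amount / (1 + k * delay)


# Hypothetical k (per second), chosen only for illustration.
k = 0.5

immediate = discounted_value(2, k, 0)  # 2 s of food, no delay
delayed = discounted_value(4, k, 4)    # 4 s of food after a 4 s delay

print("immediate:", immediate)
print("delayed:", round(delayed, 3))
print("prefers immediate" if immediate > delayed else "prefers delayed")
```

Under this formula, the smaller immediate reward wins in this example whenever k is above 0.25 per second. With a lower k, the larger delayed reward keeps more of its value and would be preferred instead.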

Archive of the Journal of the Experimental Analysis of Behavior

View the contents of all back issues from 1958-2012.

What is the Matching Law?

First described in detail in a 1961 article by Richard Herrnstein, the matching law says that responses are distributed among alternatives in proportion to the relative amounts of reward obtained on each alternative. If you get 1/3 of your reward on behavior A and 2/3 on B, you will devote 1/3 of your effort to A and 2/3 to B.
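The proportional relation described above can be sketched in Python. This is a minimal illustration of strict matching only. The function name and the reward counts are made up for the example, and the sketch ignores the deviations from strict matching that real data often show.

```python
def matching_allocation(rewards):
    """Predict the share of responding on each alternative.

    Strict matching: each alternative's share of responses equals
    its share of the total reward obtained.
    """
    total = sum(rewards.values())
    if total <= 0:
        raise ValueError("total reward must be positive")
    return {name: amount / total for name, amount in rewards.items()}


# Hypothetical reward counts: A earns 1/3 of the reward, B earns 2/3.
shares = matching_allocation({"A": 10, "B": 20})

for name, share in shares.items():
    print(name, round(share, 3))
```

Passing more than two alternatives works the same way, since each share is simply that alternative's reward divided by the total.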

Classic Article on Motivation: Rearranging the Motivative, Reinforcing, and Discriminative Functions of Stimuli.

In 1970, Joseph Mendelson, a physiological psychologist at the University of Kansas, reported a curious finding involving motivation and reinforcement. Specifically, he found that rats would manipulate their own deprivation level when the appropriate reward was present.

Nine rats had received brain electrodes that would cause drinking when activated. Specifically, the electrodes were in an area of the lateral hypothalamus known to control drinking (and sometimes reinforcement). What the electrode did was simulate strong water deprivation.

However, when Mendelson reversed the usual situation, allowing the rats to press a lever to stimulate themselves, seven of the nine would press, but only if water was available. The remaining two pressed more often when water was present*. In everyday terms, the rats would make themselves thirsty, but only if they had something to drink.

Mendelson explained these findings in terms of the physiology of the lateral hypothalamus. For behavior analysts, the situation becomes one of figuring out which events are reinforcers, discriminative stimuli, and motivative operations. In practical terms, however, Mendelson showed that organisms will behave to increase their own deprivation state in the presence of a relevant reinforcer.

*It is likely that the electrodes in these rats also had a reinforcing function.

Reference:

Mendelson, J. (1970). Self-induced drinking in rats: The qualitative identity of drive and reward systems in the lateral hypothalamus. Physiology and Behavior, 5(8), 925-926.

Why do we get pauses after reinforcement on fixed schedules?

We get pauses after reinforcement on fixed schedules because the delivery of the reinforcer is a signal that the probability of reinforcement is at its lowest. That is, the reinforcer is also a discriminative stimulus. On a fixed-interval one-minute schedule, for instance, the food delivery signals that no food is going to come for a minute no matter what the organism does. Thus, the stimuli associated with other reinforcers, no matter how insignificant, can come to control the behavior. Instead of pressing the lever the rat explores or grooms for a while. In a classroom, a teacher who delivers reinforcers at predictable intervals is teaching the children that getting praise means that it will be a while before praise will come again, thus inducing the kids to look for other rewards.

Pseudoscience Alert: Facilitated Communication is Back

Facilitated Communication (FC) is back and growing in popularity. People are once again being falsely accused of rape and abuse. One of the latest cases continues here in Michigan. FC is appearing in new and different forms in schools and colleges. FC advocates are once again being given lavish attention by gullible members of the media and given public and private financing for their dangerous and scientifically discredited pseudoscience.

A technical examination of Rosemary Crossley and Chris Borthwick's charges of fraud against Frontline and documentary producer Jon Palfreman.

Discrimination Training Sequence Enables Horses to Pick Their Own Blankets in Cold Weather.

By selectively associating different blanket weights with different symbols, and rewarding correct choices, horses were taught to pick their own blankets.

Writing in The Horse, Christa Lesté-Lasserre reports: Using a simple series of easily distinguishable printed symbols, Mejdell’s group taught 23 horses to associate symbols with certain actions. The horses learned that one symbol meant “blanket on,” another meant “blanket off,” and a third meant “no change.” Once the horses had learned the meanings (which took an average of 11 days), the researchers gave them free rein to choose symbols and rewarded them with food for their selection, regardless of which symbol they chose.

A common problem in blanket choice for horses is figuring out whether the horse is actually comfortable. This procedure would be an immediate solution. However, research on impulsivity predicts that the horses will make short-term choices, and that the blanket they want at the moment might not be suitable for conditions later.

Everyone seems fascinated by the pigeons B.F. Skinner taught to play a version of ping-pong. But that was not the most important part of his article, "Two 'Synthetic' Social Relations." It was just the introduction.

Basically, if you teach a couple of pigeons to peck at a ping-pong ball, and then put them on opposite sides of a small table, they will "play" ping-pong. That was a big deal at the time because the hand shaping of behavior was new. But, conceptually there aren't a lot of implications.

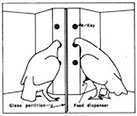

The second part of the article is the one to really pay attention to. What Skinner did was set up a contingency that required two pigeons separated by a pane of glass to peck corresponding keys at the same time. One pigeon spontaneously became a "leader," looking for which one of three keys produced food. The other pigeon, the "follower," came to mirror the behavior of the leader very closely, and the two seemed to be mirror images. This behavior generalized, and the pigeons would seem to mirror other behaviors as well. The follower pigeon showed the ability to engage in new forms of behavior simply by seeing them in the leader.

Why is this so important? Skinner had demonstrated how sophisticated generalized imitation can be established quickly with just contingent reward for imitating a relatively simple response. If a bird can do it, why not a child?

Behavior Analysis Association of Michigan, Department of Psychology, Eastern Michigan University, Ypsilanti, MI 48197